
Meet The Former Reddit Mod Hunting Foreign Disinformation Operations

"When everyone’s a Chinese/Russian shill, it’s easy to dismiss or miss actual Chinese/Russian shills."

Welcome to Extra Garbage Day! Every other week, I’ll be dropping a bonus Thursday issue just for paying subscribers. To start, these will be Q&As with interesting people I’ve been dying to interview. Let me know what you think.

One of the more magical things about writing about the internet is that anyone can be an expert. We’ve seen this democratization of voices weaponized by grifters over the last few years, but it’s still something I think is worth defending. Sometimes, you just discover someone who is really kickass at what they do. For instance, a couple years ago I came across a Twitter user named @DivestTrump. I’ve mentioned him before in this newsletter.

Back in 2018, he became a main source for a story I did about Russian misinformation on Reddit. I’ve continued to follow him because I really consider his account to be one of the best places to keep track of foreign interference on Reddit. While Reddit doesn’t dominate the internet like it used to, it’s still a huge community — around 400 million users. It’s still in the top 10 most popular social media sites in the world. And, up until it was finally banned three months ago, it was home to r/The_Donald, one of the biggest pro-Trump online communities.

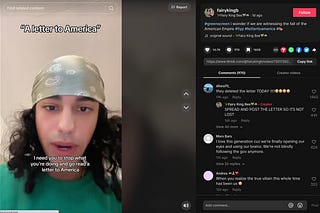

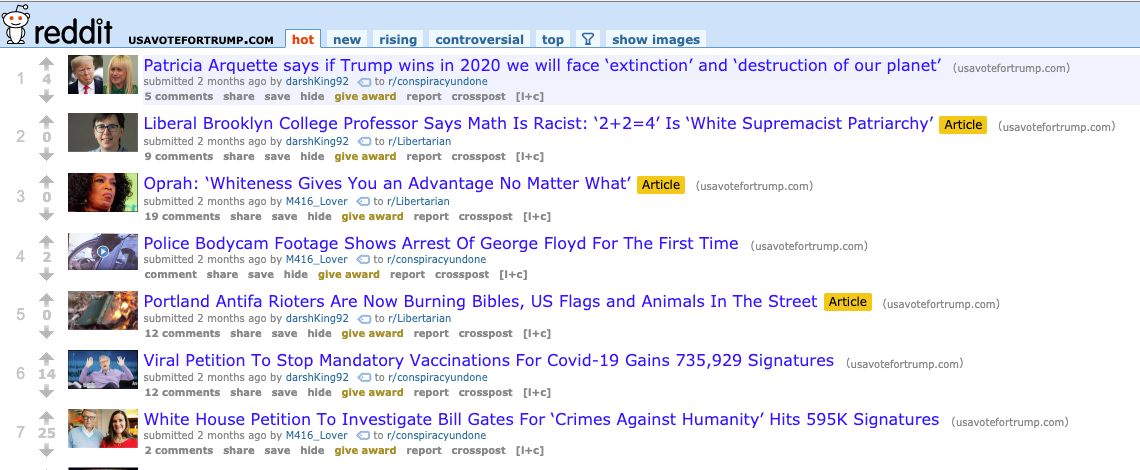

Like a lot of people doing the hard work of researching and analyzing online communities, @DivestTrump doesn’t get paid to do it and, for safety reasons, just goes by his username. That’s what I’ll be using throughout, but if you’re curious, he’s in his 30s and works as an engineer. His process for monitoring Reddit is pretty straightforward: he uses a script to pull domains shared on various political and news-focused subreddits and then digs into the suspicious ones. He has found a pretty impressive amount of proof of foreign interference over the years. Here’s a screenshot of a suspicious domain he sent me a few weeks ago.
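For the curious, here is a rough sketch of what the domain-pulling step of a script like that might look like. This is not @DivestTrump’s actual code. The `KNOWN_DOMAINS` allowlist, the function names, and the sample links are all assumptions made up for illustration, and a real version would also need to fetch the links from Reddit in the first place.

```python
from collections import Counter
from urllib.parse import urlparse

# Hypothetical allowlist of familiar outlets; not taken from the interview.
KNOWN_DOMAINS = {"nytimes.com", "reuters.com", "apnews.com"}


def extract_domain(url: str) -> str:
    """Return the lowercased host of a URL, without a leading 'www.'."""
    host = (urlparse(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host


def unfamiliar_domains(urls, known=KNOWN_DOMAINS):
    """Count how often each domain outside the allowlist shows up in shared links."""
    counts = Counter(extract_domain(u) for u in urls)
    return {d: n for d, n in counts.items() if d and d not in known}


# Made-up sample of links shared in a subreddit.
sample = [
    "https://www.reuters.com/article/1",
    "https://geotus.army/abc",
    "http://geotus.army/def",
    "https://example.org/post",
]
print(unfamiliar_domains(sample))
```

Anything this surfaces is only a starting point. The actual digging into who runs a domain still has to be done by hand.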

While we were finishing up our interview yesterday, news broke that the FBI was accusing Iran and Russia of election interference. As far as we currently know, Iranian state actors were behind an email campaign and video about voter fraud, as well as a text message campaign, purporting to come from the Proud Boys street gang, meant to intimidate voters.

So the timing for this week’s interview is pretty perfect. @DivestTrump, more than anyone I’ve met, has a really good understanding of exactly what a disinformation campaign looks like and how effective (and ineffective) they can be. The following interview has been edited for flow and clarity.

So I’ve been following you for ages, but I don’t actually know what made you get into this stuff. How did you get into doing this kind of research on Reddit?

I started researching disinformation on Reddit to win a series of online arguments. When I joined Reddit, I just wanted to make memes and crack jokes. I actively avoided politics so my career wouldn’t be impacted on the off-chance I ever got doxxed. When The_Donald shifted from satire to being a hub for hatred and the alt-right, many other moderators and I pleaded with Reddit admins [and Reddit employees] to rein them in or ban the community outright. When I saw they weren’t taking it seriously, I started trying to take over the subreddit and turn it into a Donald Duck meme community. This was drawing negative attention from the alt-right, and my co-mods from some default subreddits asked me to stop so it wouldn’t attract attacks on them and our subreddits, since the subreddits were apolitical and we knew what the alt-right was capable of.

Around this time, an unrelated incident led to my co-mods getting doxxed by The_Donald users, and the mods stickied the doxx rather than removing it. I started using an alt account to document all the ways The_Donald was breaking Reddit’s own rules. During an AMA with Reddit CEO Steve Huffman (u/spez), I provided hundreds of examples of rule-breaking hate speech from The_Donald. He deflected and made excuses, but I continued documenting examples. I moved from manually searching for hate speech to writing scripts to find examples.

Meanwhile, in my personal life, my sister-in-law started an argument with me claiming there was no proof Russia had interfered with the election. I dug up articles and examples and while there were plenty of examples, actual proof linking Russia to these troll accounts didn’t exist beyond taking Twitter/Facebook/the FBI’s word for it. So, I shifted from using scripts to find hate speech to detecting Russian accounts and domains on The_Donald. I found a few dozen Russian accounts, but like the accounts in the news, there was no smoking gun connecting them to Russia based on public data. I reached out to u/spez and told him what I found. We emailed back and forth and even spoke on the phone. Eventually, he put me in touch with the CTO and some employees on the “anti-evil” team. Some of my finds they deemed normal accounts, but many were released as part of a public announcement Reddit made regarding Russian accounts.

Next, I found [the website] USA Really using link shorteners on The_Donald. Being a Reddit moderator gives you a pretty good eye for picking out suspicious accounts and domains and automation can help with that. I connected the dots from a suspicious URL (geotus.army) directly to the Russian Internet Research Agency. I finally won my internet argument with my sister-in-law, but I gave Reddit the courtesy of investigating them first.

After a week or so, I got tired of waiting and asked if I could make it public, to which I was told I could proceed. I posted about the Russian site, which you wrote about, and everything kind of blew up around me. Spez was outwardly angry with me and even blocked me on Signal, and the rest of the admins started downplaying it. So, I did what I knew would make a dramatic situation on Reddit even more dramatic, which is to delete my accounts without warning. I ended up interviewing with a lot of mainstream media around the world and the FBI. After things cooled down, I’ve just been hunting disinformation and shitposting on Twitter with a rather low profile. The_Donald was recently banned and I’ll never get credit, but I finally won that argument, too. I’m sure I’m glossing over parts and missing pieces, but that’s the gist of it.

Wait, you spoke to the FBI? That's crazy, I never knew that.

Yes, I emailed back and forth and had a call with the FBI. They're tight-lipped, but it was right before infamous Russian troll Alexander Malkevich got arrested.

This might seem like a silly question, but do you think Reddit is a good website, haha? Like, is it a place you still enjoy spending time?

I rarely browse Reddit for fun anymore. I think Reddit has potential to be a good website, but the leadership isn’t there. It’s easy to block out the “bad” or “good” parts of Reddit depending on your perspective, which is why so many users feel positively about Reddit, but if you look at the website as a whole, it’s a very toxic place. Even things Reddit has “banned” still exist in large volume.

You've found so many good foreign influence operations on Reddit. How would you describe what they typically look like? Like what are these sites actually doing?

Foreign influence operations look different depending on which country is behind them, and they’re evolving as they get shut down. Current Russian disinformation blends in with other content in far-left or far-right communities. In many cases, Russia is using American freelancers, often “influencers” with their own following, so at face value, there’s almost no way to distinguish these websites from American far-left or far-right sites. Hyper-partisan content gets a lot of views. Even if it’s not a foreign influence campaign, it’s likely a grift or domestic influence campaign.

Iran/Turkey/Saudi/Palestinian campaigns look like the campaigns Russia did in 2016. They use the same tactics with different details and angles. India/Pakistan campaigns are the most difficult to discern from partisan citizens. China has the most sophisticated operation currently. They use state media and paid foreign articles in parallel with covert social media campaigns. For China/India/Pakistan, you have to look for detailed, prepared comments that respond quicker than someone could reasonably type them out. The same goes for Monsanto/Bayer and other corporate shills. What makes finding Chinese campaigns even more difficult is that a Chinese company made an investment in Reddit, and so everyone on Reddit wrongly claims Chinese censorship over innocuous incidents. When everyone’s a Chinese/Russian shill, it’s easy to dismiss or miss actual Chinese/Russian shills.
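[Ed. note: The “quicker than someone could reasonably type” test can be sketched in a few lines of code. This is only an illustration, not something @DivestTrump shared. The `Reply` fields, the typing-speed ceiling, and the minimum length are all assumed values you would need to tune.]

```python
from dataclasses import dataclass

# Assumed ceiling for sustained human typing, in characters per second
# (8.0 is roughly 96 words per minute at 5 characters per word).
MAX_CHARS_PER_SECOND = 8.0


@dataclass
class Reply:
    parent_posted_at: float  # Unix timestamp of the comment being answered
    posted_at: float         # Unix timestamp of the reply
    text: str


def looks_prepared(reply: Reply, min_length: int = 400) -> bool:
    """Flag long replies that arrived faster than they could plausibly be typed."""
    if len(reply.text) < min_length:
        return False  # short replies are easy to type quickly
    elapsed = reply.posted_at - reply.parent_posted_at
    if elapsed <= 0:
        return True  # a long reply with no time to type it at all
    return len(reply.text) / elapsed > MAX_CHARS_PER_SECOND


fast = Reply(parent_posted_at=0, posted_at=30, text="x" * 900)
slow = Reply(parent_posted_at=0, posted_at=600, text="x" * 900)
print(looks_prepared(fast), looks_prepared(slow))
```

A flag here is a lead, not proof. Plenty of ordinary people paste in something they wrote earlier.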

Do you think Reddit is handling misinformation better or worse than places like Facebook or Twitter?

Reddit is handling disinformation both better and worse than other social media companies. Overall, Reddit is behind on enforcement and policies. Reddit stopped running transparency for political ads a few months ago. Reddit doesn’t label foreign state-owned or misleading content. What policies Reddit does have, they don’t enforce consistently.

If Biden wins the election, do you think these misinfo/disinfo ops will become worse? Or more aggressive?

If Biden wins, I think foreign disinformation will continue on the same path, but I think Biden will use the resources of the federal government more aggressively against them. If Biden wins, I can almost guarantee domestic disinformation will increase faster than if Trump wins. All the right-wing grifters and agenda pushers will come out of the woodwork.

[Ed. note: This is literally when the FBI press conference started.]

Did you just see the Iran thing the FBI just put out?

It makes perfect sense. I kind of subtweeted the news of it.

In Iran disinfo stuff you’ve seen, is there anything about their tactics that feels different from Russia or another country? I’m always kinda curious like how different countries do stuff.

Iran is very similar to Russia. I almost wonder if they work together sometimes. The main differences are Iran seems to care less about getting caught and they use QAnon and other unrelated groups to grow followers quickly, then suddenly switch to pushing their actual messaging without deleting old tweets to cover their tracks. Like I said, Iran operations now generally resemble Russian operations four years ago, just swap out the agenda.

Iran is less likely to use Americans, but they'll send university students and young professionals to America to do their work.

One more question for you. Do you think the nature of disinfo/misinfo ops has changed in the last few years? Like are you seeing tactics now that you haven't seen before or does it feel like it's become fairly stagnant?

The new trick from Russia is laundering content through more reputable, American outlets. They run their articles in far-left sites. Otherwise, it's been incremental variations of old tricks.

Like Russian trolls have figured out how to get published in places, or do you think it’s just American writers getting wrapped up in this stuff without knowing it?

Both.

A number of those sites re-published articles from IRA sites, sometimes even including a link back. Probably a traffic-sharing arrangement.

Thank you for reading and supporting Garbage Day! If you’ve been forwarded this email, welcome! Definitely make sure you check out previous Extra Garbage Days:

And finally, we can’t spend an entire Garbage Day issue talking about Reddit and not include an extremely cursed Reddit Relationship post. It looks like another Reddit boyfriend forgot how to wipe his ass again:

***Typos in this email aren’t on purpose, but sometimes they happen***
